Following comments from the community, we have generated a new performance evaluation report for HBase 0.20.0. Please refer to the following document.

We have been using HBase for around a year in our development and projects, from 0.17.x to 0.19.x. We, like the rest of the community, are well aware of the serious performance and throughput issues in these releases.

Now, the great news is that hbase-0.20.0 will be released soon. Jonathan Gray from Streamy, Ryan Rawson from StumbleUpon, and Jean-Daniel Cryans have done a great job rewriting much of the code to improve performance. The two presentations [1][2] provide more details on this release.

The following items are very important to us:

- Insert performance: our data is generated at a high rate.

- Scan performance: for data analysis by MapReduce.

- Random access performance.

- The HFile format (HBase's counterpart to Bigtable's SSTable).

- Less memory and I/O overhead.

Below is our evaluation of hbase-0.20.0 RC1:

Cluster:

- 5 slaves + 1 master

- Slaves (1-4): 4 CPU cores (2.0 GHz), 800GB SATA disks, 8GB RAM. Slave (5): 8 CPU cores (2.0 GHz), 6 disks with RAID1, 4GB RAM.

- 1Gbps network, all nodes under the same switch.

- Hadoop-0.20.0, HBase-0.20.0, Zookeeper-3.2.0

We modified org.apache.hadoop.hbase.PerformanceEvaluation because the original code has the following problems:

- It does not match the hadoop-0.20.0 API.

- The way it splits the work among map tasks is not strict. It needs to provide correct InputSplit and InputFormat classes.

The evaluation programs use MapReduce to run parallel operations against an HBase table.

- Total rows: 5,242,850.

- Row size: 1000 bytes for value, and 10 bytes for rowkey.

- Sequential ranges: 50. (also used to define the total number of MapTasks in each evaluation)

- Rows per sequential range: 104,857.
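The partitioning above can be sketched in a few lines of Java. This is only an illustration of the arithmetic, not the code from our modified PerformanceEvaluation: the class `SequentialRanges`, its `Range` type, and the zero-padded decimal row-key format are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Hypothetical helper: splits the row space into equal sequential ranges, one per map task. */
final class SequentialRanges {

    /** A half-open range of row numbers: [startRow, endRow). */
    static final class Range {
        final long startRow;
        final long endRow;

        Range(long startRow, long endRow) {
            this.startRow = startRow;
            this.endRow = endRow;
        }

        long size() {
            return endRow - startRow;
        }
    }

    /** Splits totalRows into rangeCount contiguous ranges of equal size. */
    static List<Range> split(long totalRows, int rangeCount) {
        if (rangeCount <= 0 || totalRows % rangeCount != 0) {
            throw new IllegalArgumentException("totalRows must be a positive multiple of rangeCount");
        }
        long rowsPerRange = totalRows / rangeCount;
        List<Range> ranges = new ArrayList<>();
        for (int i = 0; i < rangeCount; i++) {
            ranges.add(new Range(i * rowsPerRange, (i + 1) * rowsPerRange));
        }
        return ranges;
    }

    /** Assumed key format: row number zero-padded to 10 characters (10 bytes in ASCII). */
    static String rowKey(long row) {
        return String.format(Locale.ROOT, "%010d", row);
    }

    public static void main(String[] args) {
        List<Range> ranges = split(5_242_850L, 50);
        System.out.println(ranges.size() + " ranges of " + ranges.get(0).size() + " rows each");
        System.out.println("last row key: " + rowKey(ranges.get(ranges.size() - 1).endRow - 1));
    }
}
```

Each range would then be handed to one map task, so 50 ranges give 50 map tasks of 104,857 rows each.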

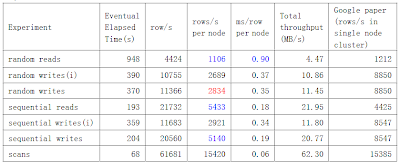

The principle is the same as the evaluation programs described in Section 7, Performance Evaluation, of the Google Bigtable paper [3], pages 8-10. Since we have only 5 nodes to act as clients, we set mapred.tasktracker.map.tasks.maximum=3 to avoid a client-side bottleneck.
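To make the effect of that setting concrete, here is a small worked calculation in Java. It assumes that all 5 slave nodes run a TaskTracker and that the scheduler keeps every map slot busy; the class and method names are hypothetical and are not Hadoop APIs.

```java
/** Hypothetical helper for the map-slot arithmetic; the names are ours, not Hadoop's. */
final class MapSlotPlan {

    /** Maximum number of map tasks (HBase clients) running at the same time. */
    static int concurrentMapTasks(int clientNodes, int mapSlotsPerNode) {
        return clientNodes * mapSlotsPerNode;
    }

    /** Number of scheduling waves needed to run all map tasks (ceiling division). */
    static int waves(int totalMapTasks, int concurrentMapTasks) {
        return (totalMapTasks + concurrentMapTasks - 1) / concurrentMapTasks;
    }

    public static void main(String[] args) {
        int concurrent = concurrentMapTasks(5, 3); // 5 nodes, map.tasks.maximum = 3
        System.out.println("concurrent clients: " + concurrent);
        System.out.println("waves for 50 map tasks: " + waves(50, concurrent));
    }
}
```

Under these assumptions at most 15 clients hit HBase concurrently, and the 50 map tasks complete in 4 waves (15, 15, 15, and 5 tasks).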

randomWrite (init) and sequentialWrite (init) are evaluations against a new table. Since only one RegionServer is accessed at the beginning, the performance is not so good. randomWrite and sequentialWrite are evaluations against an existing table that is already distributed across all 5 nodes.

Compared to the metrics in the Google paper (Figure 6): the write and randomRead performance is still not so good, but the result is much better than any previous HBase release, especially for randomRead. We even got better results than the paper on the sequentialRead and scan evaluations (though we should be aware that the paper was published in 2006). This result gives us confidence.

- The new HFile should be the major contributor to this success.

- BlockCache provides a further performance boost for sequentialRead and scan.

- The client-side write buffer accelerates sequentialWrite, but not dramatically, since each write operation still writes to both the commit-log file and the memstore.

- randomRead performance is not good enough; bloom filters may improve it in the future.

- scan is so fast that MapReduce analysis on HBase tables will be efficient.
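The idea behind the client-side write buffer mentioned above can be illustrated with a simplified model. The sketch below is not HBase's implementation: `WriteBufferSketch` and its methods are hypothetical, and the "flush" only counts round trips instead of sending data to a RegionServer.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical client-side write buffer; a simplified model, not HBase's implementation. */
final class WriteBufferSketch {
    private final long bufferLimitBytes;
    private final List<byte[][]> pending = new ArrayList<>();
    private long bufferedBytes = 0;
    private int flushes = 0;

    WriteBufferSketch(long bufferLimitBytes) {
        if (bufferLimitBytes <= 0) {
            throw new IllegalArgumentException("bufferLimitBytes must be positive");
        }
        this.bufferLimitBytes = bufferLimitBytes;
    }

    /** Buffers one row; flushes automatically once the buffer reaches its size limit. */
    void put(byte[] rowKey, byte[] value) {
        pending.add(new byte[][] {rowKey, value});
        bufferedBytes += rowKey.length + value.length;
        if (bufferedBytes >= bufferLimitBytes) {
            flush();
        }
    }

    /** Stands in for sending all pending rows to the server in one round trip. */
    void flush() {
        if (pending.isEmpty()) {
            return;
        }
        flushes++;
        pending.clear();
        bufferedBytes = 0;
    }

    int flushCount() {
        return flushes;
    }

    int pendingCount() {
        return pending.size();
    }

    public static void main(String[] args) {
        // Buffer sized for ten rows of 10-byte key + 1000-byte value.
        WriteBufferSketch buffer = new WriteBufferSketch(10 * 1010);
        for (int i = 0; i < 25; i++) {
            buffer.put(new byte[10], new byte[1000]);
        }
        buffer.flush(); // send the remaining rows
        System.out.println("round trips: " + buffer.flushCount());
    }
}
```

In this model, 25 writes cost 3 round trips instead of 25. Buffering only saves client-to-server round trips; the server still has to append every row to the commit log and the memstore, which fits the modest gain we observed for sequentialWrite.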

We are looking forward to and researching the following features:

- Bloom Filter to accelerate randomRead.

- Bulk-load.

We need to do more analysis of this evaluation and read the code in detail. Here is our PerformanceEvaluation code: http://dl.getdropbox.com/u/24074/code/PerformanceEvaluation.java

References:

[1] Ryan Rawson’s presentation at NOSQL. http://blog.oskarsson.nu/2009/06/nosql-debrief.html

[2] HBase Goes Realtime. http://wiki.apache.org/hadoop-data/attachments/HBase(2f)HBasePresentations/attachments/HBase_Goes_Realtime.pdf

[3] Google, Bigtable: A Distributed Storage System for Structured Data. http://labs.google.com/papers/bigtable.html

Anty Rao and Schubert Zhang